- Home

- Billionaires

- Investing Newsletters

- 193CC 1000

- Article Layout 2

- Article Layout 3

- Article Layout 4

- Article Layout 5

- Article Layout 6

- Article Layout 7

- Article Layout 8

- Article Layout 9

- Article Layout 10

- Article Layout 11

- Article Layout 12

- Article Layout 13

- Article Layout 14

- Article Sidebar

- Post Format

- pages

- Archive Layouts

- Post Gallery

- Post Video Background

- Post Review

- Sponsored Post

- Leadership

- Business

- Money

- Small Business

- Innovation

- Shop

Recent Posts

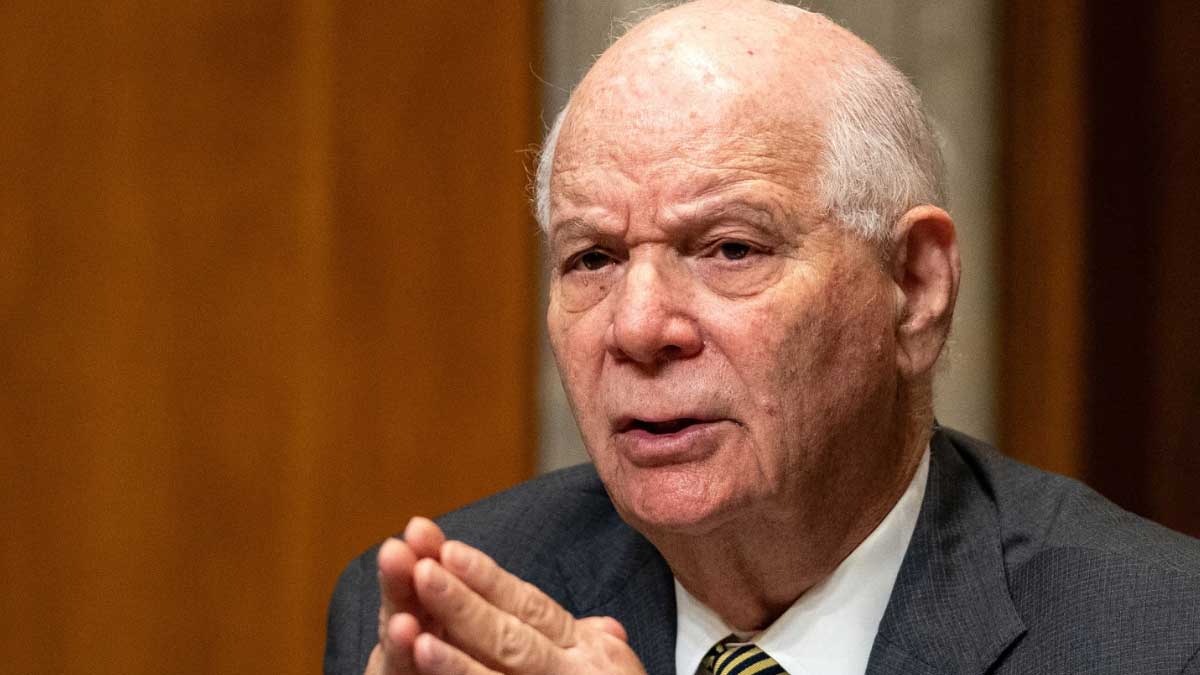

Deepfake Incident: Sen. Cardin Targeted by Spoofing Operation

Senate Foreign Relations Committee Chairman Ben Cardin, a Democrat from Maryland, was recently targeted by a sophisticated deepfake video call in which someone impersonated a high-ranking Ukrainian official. The incident, reported late Wednesday, illustrates the growing threat that AI-generated content poses as a vehicle for misinformation and cyberattacks. It was first disclosed by Punchbowl News, later confirmed by The New York Times, and described in a security alert from the Senate’s security office about a Zoom call Cardin joined with someone posing as Dmytro Kuleba, Ukraine’s former foreign minister.

Two Senate officials confirmed to The Times that Cardin was the senator involved, and Cardin himself described a “deceptive attempt” by someone posing as a recognized individual from Ukraine to engage him in conversation. The deepfake reproduced both the appearance and the voice of the person being impersonated, making the impersonation alarmingly credible.

Cardin received an email that appeared to come from Kuleba, who had recently stepped down amid a broader cabinet reshuffle, and then joined a Zoom call with someone who looked like him. During the conversation, however, Cardin began to doubt the caller’s authenticity. The questions triggered his suspicion: they were politically charged and focused on the upcoming election. When the caller asked whether Cardin supported using long-range missiles to strike Russian territory, the senator saw a clear red flag.

The Senate’s security office took the incident seriously and warned lawmakers of an ongoing “active social engineering campaign” targeting senators and their staff. The advisory points to a marked increase in the sophistication and believability of such attacks, suggesting that the call was not an isolated incident but part of a broader trend toward more advanced disinformation tactics.

The Senate security notice stressed what set this event apart: “While we have seen an increase of social engineering threats in the last several months and years, this attempt stands out due to its technical sophistication and believability.” The warning underscores the need for lawmakers and their teams to stay alert to such deceptive practices.

More broadly, intelligence officials and technology leaders have grown increasingly concerned about foreign influence operations targeting U.S. elections. The Office of the Director of National Intelligence recently issued an assessment finding that foreign actors, particularly from Russia and Iran, are using generative AI to bolster their attempts to sway U.S. elections. The same report noted, however, that while AI has improved and accelerated certain aspects of foreign influence efforts, it has not yet fundamentally transformed these operations.

Brad Smith, Microsoft’s vice chair and president, testified before a Senate committee last week about foreign interference efforts aimed at the November elections. He acknowledged that AI tools have so far been less impactful in these operations than previously anticipated, but warned that “determined and advanced actors” could refine their use of the technology, posing an ongoing threat. As AI evolves, so too will the strategies of those seeking to manipulate political discourse and public perception.

Lawmakers are beginning to respond to the rising tide of disinformation and deepfakes. Last week, California Governor Gavin Newsom signed three bills aimed at curbing the distribution of misleading media during election periods. One of them makes it illegal to distribute “materially deceptive audio or visual media of a candidate” within 120 days before an election and, in some cases, 60 days after. Newsom had pledged to tackle political deepfakes after Elon Musk, the owner of X, shared an altered campaign video featuring Vice President Kamala Harris. The edited video used AI-generated audio to create a misleading narrative about Harris, calling her the “ultimate diversity hire” and a “deep state puppet.”

The California law exempts parody as long as it is clearly labeled, but Musk has criticized the measures as censorship, arguing that they effectively make parody illegal. The dispute highlights how difficult it is to balance free expression against the need to combat harmful misinformation.

As the technology advances, lawmakers and security officials will need to remain vigilant against deepfakes and other AI-generated content. The attempt on Senator Cardin is a stark reminder that such tools can disrupt the political landscape, and that democratic processes must be safeguarded from manipulation and deceit. As disinformation tactics evolve, both legislative frameworks and security measures will have to adapt to counter increasingly sophisticated impersonation attempts.
